vLLM Now Supports Qwen3-Next: Hybrid Architecture with Extreme Efficiency

We’re excited to announce that vLLM now supports Qwen3-Next, the latest generation of foundation models from the Qwen team. Qwen3-Next introduces a hybrid architecture designed for extreme efficiency on long contexts, and vLLM supports its full feature set.

In this post, we’ll explore Qwen3-Next’s innovations — hybrid attention, high-sparsity MoE, and multi-token prediction — and show how vLLM efficiently supports them.

Quickstart

You can run Qwen3-Next by installing the vLLM nightly build:

uv pip install vllm --extra-index-url https://wheels.vllm.ai/nightly --torch-backend=auto

Then launch:

vllm serve Qwen/Qwen3-Next-80B-A3B-Instruct -tp 4

Please refer to the vLLM Model Recipes for a more detailed installation and usage guide.
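Once the server is up, it exposes vLLM's OpenAI-compatible HTTP API. The sketch below builds a chat completion request using only the Python standard library. The address `http://localhost:8000` (the default for `vllm serve`), the helper names, and the `max_tokens` value are assumptions for illustration; adjust them to match your deployment.

```python
import json
import urllib.request

# Assumption: `vllm serve` is listening on its default host and port.
BASE_URL = "http://localhost:8000/v1"
MODEL = "Qwen/Qwen3-Next-80B-A3B-Instruct"


def build_chat_request(prompt, max_tokens=128, base_url=BASE_URL):
    """Build (but do not send) a POST request for /chat/completions."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt):
    """Send the request to a running server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server from the previous step running, `chat("Summarize hybrid attention in one sentence.")` returns the model's reply as a string.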

Hybrid Attention: Efficient Context Modeling

At the core of Qwen3-Next is its Hybrid Attention design, replacing standard attention with a combination of:

- Gated DeltaNet (linear attention for long context efficiency)

- Full Attention (standard attention for high-fidelity reasoning)

The model interleaves these two forms of attention across layers, enabling efficient scaling to 65K context lengths and beyond.
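To make the interleaving concrete, here is a minimal sketch that lays out the attention type of each layer in a hybrid stack. The repeating pattern of three linear attention layers followed by one full attention layer, and the depth of 48 layers, are assumptions for illustration and are not stated in this post; check the model's configuration for the real layout.

```python
def hybrid_layer_types(num_layers, full_attention_interval):
    """Return the attention type of each layer in a hybrid stack.

    Every `full_attention_interval`-th layer uses full attention;
    all other layers use linear attention (Gated DeltaNet).
    """
    return [
        "full_attention" if (i + 1) % full_attention_interval == 0
        else "linear_attention"
        for i in range(num_layers)
    ]


# Hypothetical configuration: 48 layers, one full attention layer in every 4.
layers = hybrid_layer_types(num_layers=48, full_attention_interval=4)
print(layers[:4])
print(layers.count("linear_attention"), layers.count("full_attention"))
```

Only the full attention layers keep a KV cache that grows with sequence length; the linear attention layers carry a fixed-size state, which is where the long-context savings come from.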

To support this, vLLM integrates Triton kernels from Flash Linear Attention, and adopts a hybrid KV cache manager to manage both linear and full attention layers, avoiding fragmentation and maximizing GPU utilization.

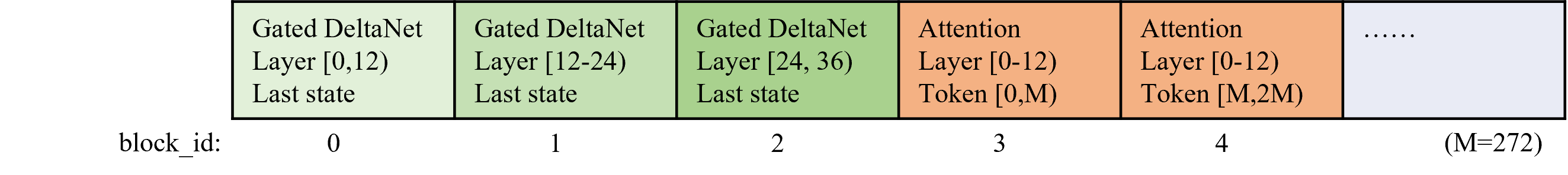

To manage state for hybrid models like Qwen3-Next, vLLM automatically tunes the “logical” block size of the full attention layers so that the state for the full attention layers and the state for the linear attention layers occupy the same amount of “physical” GPU memory. This enables simple and efficient paged memory management for hybrid models, increasing throughput for heavy workloads when GPU memory becomes fully utilized.
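The block size tuning can be illustrated with a little arithmetic. This is a simplified sketch of the idea, not vLLM's actual implementation: the function name, the byte sizes, and the alignment to a multiple of 16 tokens are all hypothetical.

```python
import math


def unify_page_size(kv_bytes_per_token, linear_state_bytes, block_multiple=16):
    """Pick a full attention block size whose page can hold one linear state.

    Returns (block_size_in_tokens, page_bytes). The linear attention state
    is then padded up to page_bytes so both layer types use equal pages.
    """
    # Smallest number of tokens whose KV entries cover the linear state.
    min_tokens = math.ceil(linear_state_bytes / kv_bytes_per_token)
    # Round up to an aligned block size (hypothetical alignment).
    block_size = math.ceil(min_tokens / block_multiple) * block_multiple
    return block_size, block_size * kv_bytes_per_token


# Hypothetical sizes, not measured from Qwen3-Next.
LINEAR_STATE_BYTES = 1_000_000   # fixed state of one linear attention layer
KV_BYTES_PER_TOKEN = 2048        # KV cache per token of one full attention layer

block_size, page_bytes = unify_page_size(KV_BYTES_PER_TOKEN, LINEAR_STATE_BYTES)
padding = page_bytes - LINEAR_STATE_BYTES
print(block_size, page_bytes, padding)
```

Because every page has the same physical size, one allocator can hand pages to either layer type, at the cost of a small amount of padding on the linear attention state.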

In addition, Flash Linear Attention is based on Triton. Launching Triton kernels can incur significant CPU overheads that disproportionately affect decode-only batches. To overcome this, vLLM enables full CUDA graph mode by default, ensuring good performance in low-latency scenarios.

High-Sparsity MoE: Extreme Efficiency

Qwen3-Next pushes sparsity further with MoE layers at a 1:50 activation ratio. In the flagship 80B-A3B model, only 3B parameters are active per token. vLLM serves these layers with its built-in efficient MoE implementation, delivering high throughput and low latency.
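As a rough illustration of what sparse activation means, the sketch below routes a single token to its top-k experts. It is a toy example in pure Python, not vLLM's MoE kernel: the expert count, the value of `top_k`, and the choice to normalize weights over only the selected experts (one common variant) are assumptions.

```python
import math


def route_token(router_logits, top_k):
    """Select the top_k experts for one token and normalize their weights.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    # Indices of the top_k largest router logits.
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Numerically stable softmax over the selected experts only.
    max_logit = max(router_logits[i] for i in chosen)
    exps = [math.exp(router_logits[i] - max_logit) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


# Toy example: 8 experts, 2 active per token (hypothetical sizes).
logits = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.5, 0.3]
routing = route_token(logits, top_k=2)
print(routing)

# Share of parameters active per token in the 80B-A3B model.
active_share = 3 / 80
print(f"{active_share:.2%}")
```

Only the selected experts run for a given token, so compute per token scales with the active parameters rather than the total parameter count.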

Multi-Token Prediction (MTP)

Another innovation in Qwen3-Next is multi-token prediction, which boosts both pretraining efficiency and inference speed. vLLM natively supports this mode, allowing Qwen3-Next to decode multiple tokens per step without modifying application code. See the recipe for how to enable it.
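One way to picture decoding multiple tokens per step is the draft-and-verify loop used in speculative decoding: extra tokens are proposed cheaply, then the main model checks them in a single pass. The sketch below shows the acceptance rule under greedy sampling. It assumes MTP outputs are used as draft tokens and is an illustration of the general idea, not vLLM's code; `verify_draft` is a hypothetical helper.

```python
def verify_draft(draft_tokens, target_tokens):
    """Greedy draft verification for one decoding step.

    draft_tokens:  k tokens proposed by the draft (e.g. MTP) head.
    target_tokens: k + 1 tokens; target_tokens[i] is the main model's
                   greedy choice after the context plus draft_tokens[:i].
    Returns the tokens emitted in this step (between 1 and k + 1).
    """
    assert len(target_tokens) == len(draft_tokens) + 1
    emitted = []
    for draft, target in zip(draft_tokens, target_tokens):
        emitted.append(target)          # the main model's token is always kept
        if draft != target:
            return emitted              # first mismatch: stop after the fix
    emitted.append(target_tokens[-1])   # all drafts accepted: add bonus token
    return emitted


print(verify_draft([5, 7], [5, 7, 9]))  # both drafts match
print(verify_draft([5, 7], [5, 8, 9]))  # second draft rejected
```

The output always matches what the main model would have produced token by token, so a wrong draft costs speed but not correctness.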

Looking Ahead

Our Qwen3-Next integration is just the beginning. On the roadmap:

- Further kernel optimizations for Gated DeltaNet layers.

- Better memory management, plus support for automatic prefix caching and P/D disaggregation for hybrid models.

- Continuous throughput improvements and CPU overhead reductions.

Acknowledgements

This effort was made possible thanks to close collaboration with many partners:

- Qwen Team, including Tao He and Jianwei Zhang, for open-sourcing the model.

- Flash Linear Attention team, including Yu Zhang and others, for reviewing the Gated DeltaNet attention kernels and improving the numerics.

- NVIDIA, including Vadim Gimpelson, for testing the models.

- IBM Research, including Thomas Parnell, for hybrid memory management and CUDA graph optimizations.

- Red Hat, including Tyler Michael Smith, Doug Smith, Tarun Kumar, and Elvir Crncevic for testing the model and tuning MoE kernels.

- Community partners: Meta, Roblox, etc. for testing, feedback, and scaling insights.

vLLM team members who contributed to this effort include: Jie Li, Kaichao You, Chen Zhang, Simon Mo.

👉 Qwen3-Next is now available in vLLM. Try it out today and experience ultra-efficient long-context inference with the latest hybrid MoE architecture.