Token-Level Truth: Real-Time Hallucination Detection for Production LLMs

Your LLM just called a tool, received accurate data, and still got the answer wrong. Welcome to the world of extrinsic hallucination—where models confidently ignore the ground truth sitting right in front of them.

Building on our Signal-Decision Architecture, we introduce HaluGate—a conditional, token-level hallucination detection pipeline that catches unsupported claims before they reach your users. No LLM-as-judge. No Python runtime. Just fast, explainable verification at the point of delivery.

The Problem: Hallucinations Block Production Deployment

Hallucinations have become the single biggest barrier to deploying LLMs in production. Across industries—legal (fabricated case citations), healthcare (incorrect drug interactions), finance (invented financial data), customer service (non-existent policies)—the pattern is the same: AI generates plausible-sounding content that appears authoritative but crumbles under scrutiny.

The challenge isn’t obvious nonsense. It’s subtle fabrications embedded in otherwise accurate responses—errors that require domain expertise or external verification to catch. For enterprises, this uncertainty makes LLM deployment a liability rather than an asset.

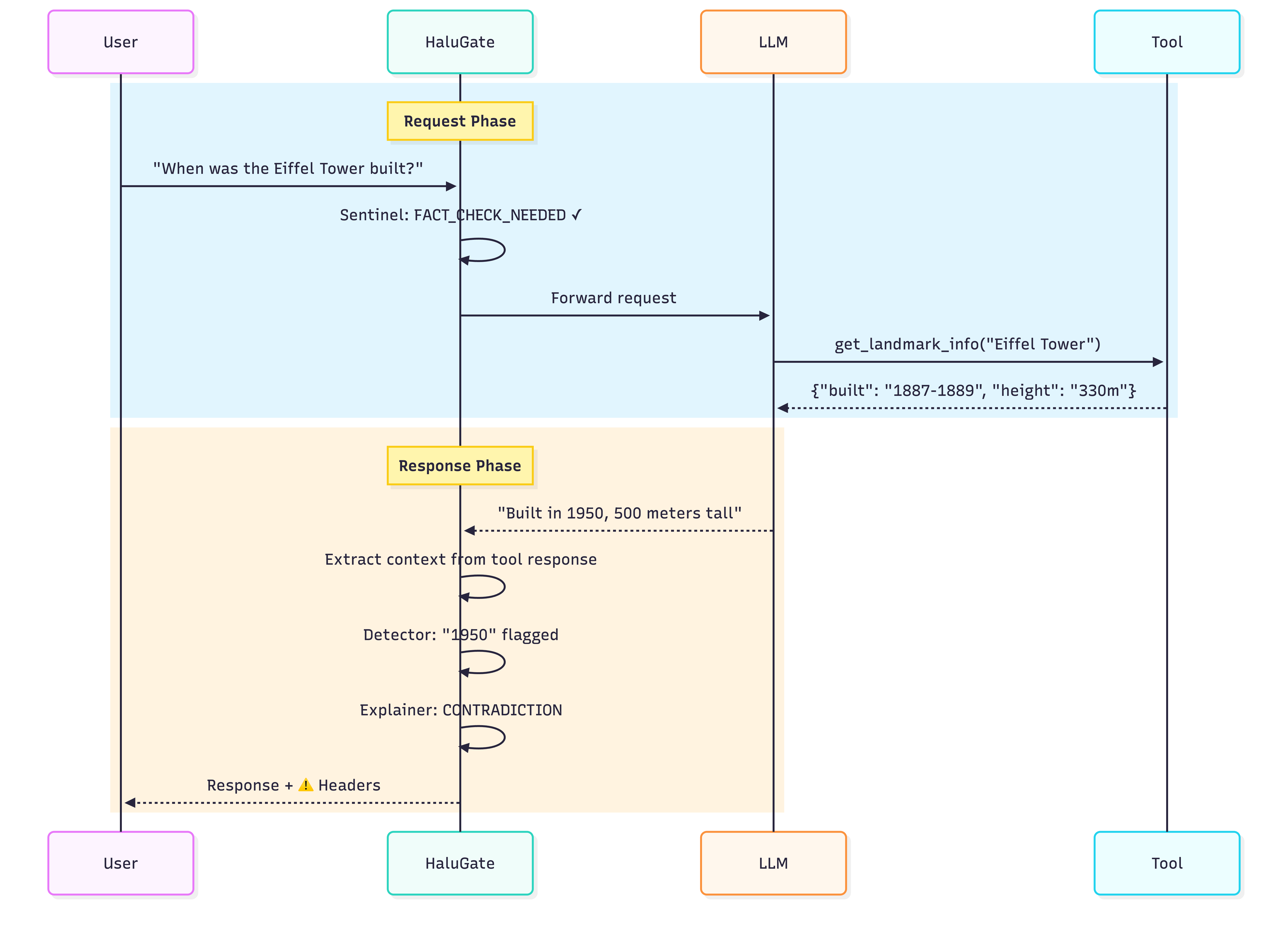

The Scenario: When Tools Work But Models Don’t

Let’s make this concrete. Consider a typical function-calling interaction:

User: “When was the Eiffel Tower built?”

Tool Call:

get_landmark_info("Eiffel Tower")Tool Response:

{"name": "Eiffel Tower", "built": "1887-1889", "height": "330 meters", "location": "Paris, France"}LLM Response: “The Eiffel Tower was built in 1950 and stands at 500 meters tall in Paris, France.”

The tool returned correct data. The model’s response contains facts. But two of those “facts” are fabricated—extrinsic hallucinations that directly contradict the provided context.

This failure mode is particularly insidious:

- Users trust it because they see the tool was called

- Traditional filters miss it because there’s no toxic or harmful content

- Evaluation is expensive if you rely on another LLM to judge

What if we could detect these errors automatically, in real-time, with millisecond latency?

The Insight: Function Calling as Ground Truth

Here’s the key realization: modern function-calling APIs already provide grounding context. When users ask factual questions, models call tools—database lookups, API calls, document retrieval. These tool results are semantically equivalent to retrieved documents in RAG.

We don’t need to build separate retrieval infrastructure. We don’t need to call GPT-4 as a judge. We extract three components from the existing API flow:

| Component | Source | Purpose |

|---|---|---|

| Context | Tool message content | Ground truth for verification |

| Question | User message | Intent understanding |

| Answer | Assistant response | Claims to verify |

The question becomes: Is the answer faithful to the context?

Why Not Just Use LLM-as-Judge?

The obvious solution—call another LLM to verify—has fundamental problems in production:

| Approach | Latency | Cost | Explainability |

|---|---|---|---|

| GPT-4 as judge | 2-5 seconds | $0.01-0.03/request | Low (black box) |

| Local LLM judge | 500ms-2s | GPU compute | Low |

| HaluGate | 76-162ms | CPU only | High (token-level + NLI) |

LLM judges also suffer from:

- Position bias: Tendency to favor certain answer positions

- Verbosity bias: Longer answers rated higher regardless of accuracy

- Self-preference: Models favor outputs similar to their own style

- Inconsistency: Same input can yield different judgments

We needed something faster, cheaper, and more explainable.

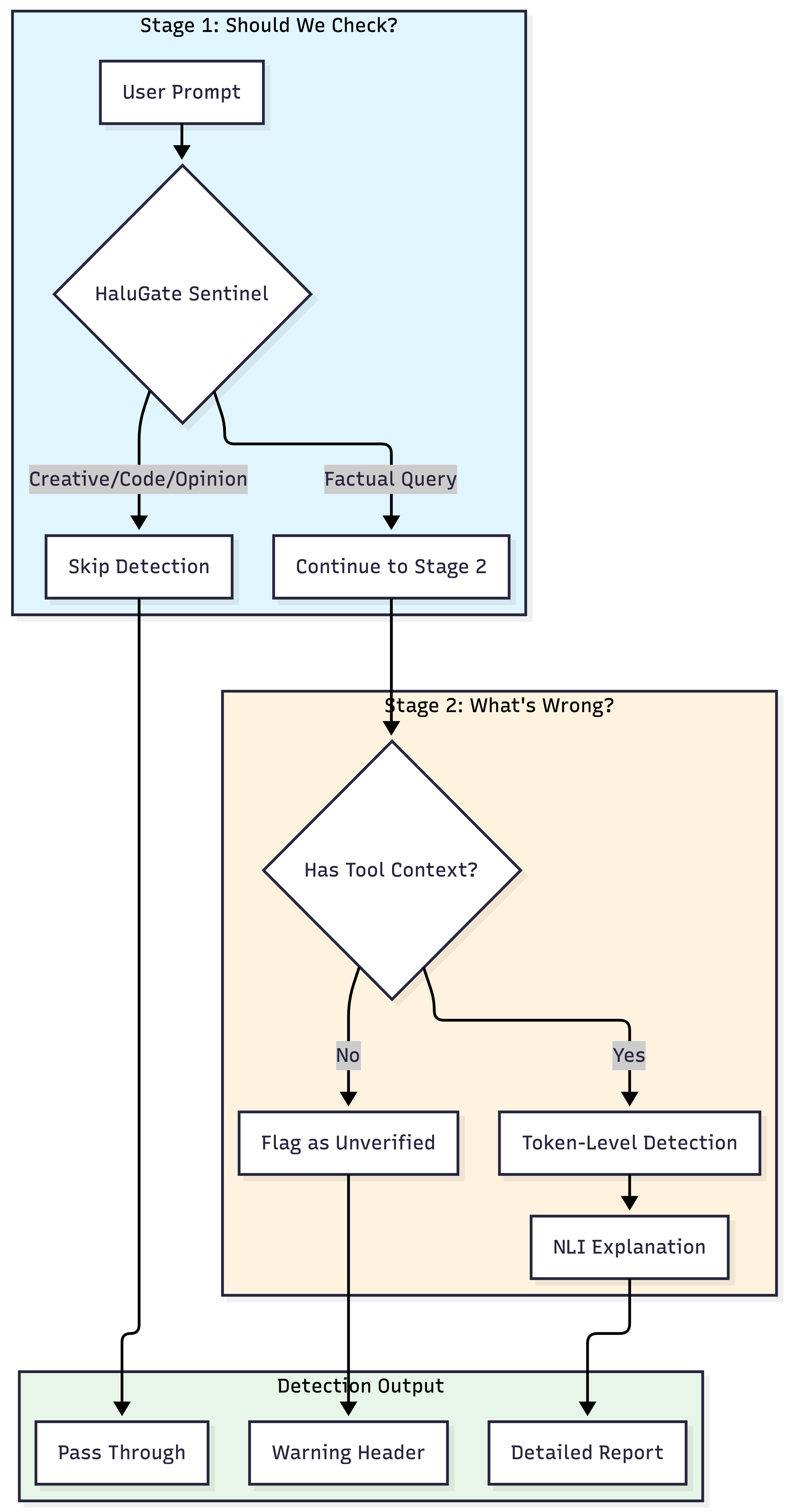

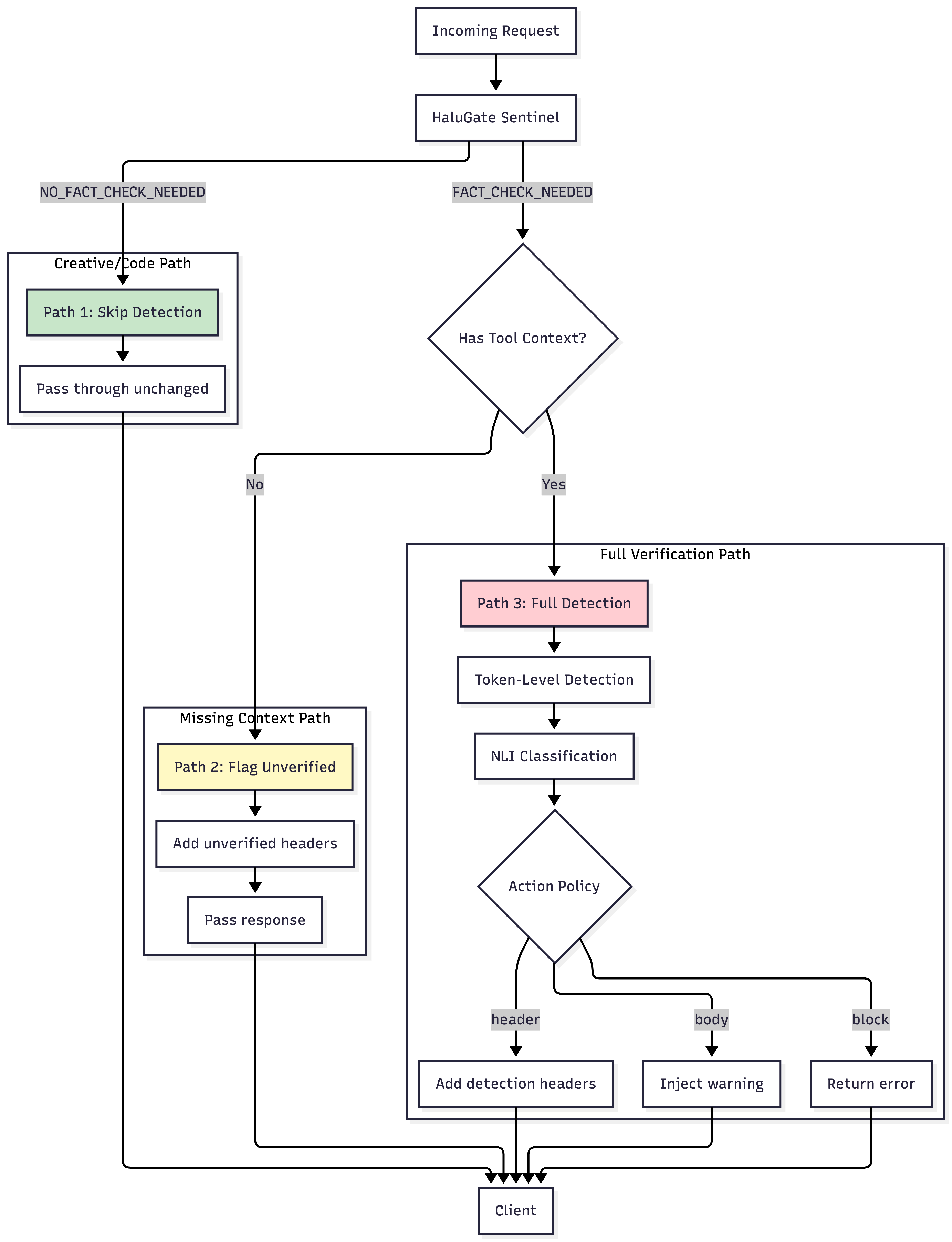

HaluGate: A Two-Stage Detection Pipeline

HaluGate implements a conditional two-stage pipeline that balances efficiency with precision:

Stage 1: HaluGate Sentinel (Prompt Classification)

Not every query needs hallucination detection. Consider these prompts:

| Prompt | Needs Fact-Check? | Reason |

|---|---|---|

| “When was Einstein born?” | ✅ Yes | Verifiable fact |

| “Write a poem about autumn” | ❌ No | Creative task |

| “Debug this Python code” | ❌ No | Technical assistance |

| “What’s your opinion on AI?” | ❌ No | Opinion request |

| “Is the Earth round?” | ✅ Yes | Factual claim |

Running token-level detection on creative writing or code review is wasteful—and potentially produces false positives (“your poem contains unsupported claims!”).

Why pre-classification matters: Token-level detection scales linearly with context length. For a 4K token RAG context, detection takes ~125ms; for 16K tokens, ~365ms. In production workloads where ~35% of queries are non-factual, pre-classification achieves a 72.2% efficiency gain—skipping expensive detection entirely for creative, coding, and opinion queries.

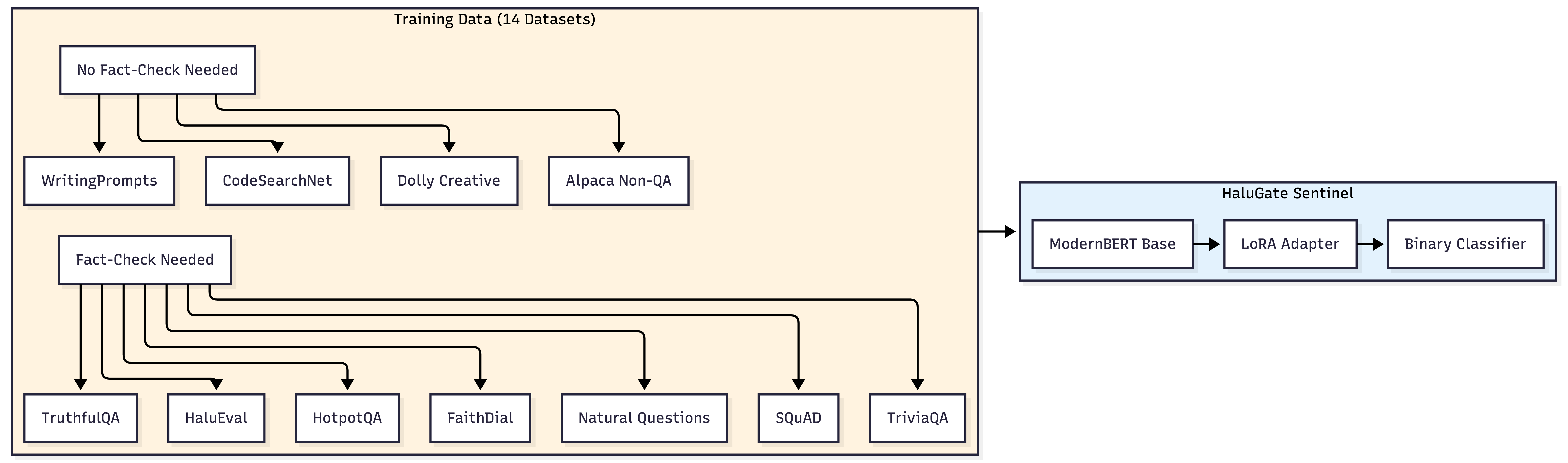

HaluGate Sentinel is a ModernBERT-based classifier that answers one question: Does this prompt warrant factual verification?

The model is trained on a carefully curated mix of:

Fact-Check Needed (Positive Class):

- Question Answering: SQuAD, TriviaQA, Natural Questions, HotpotQA

- Truthfulness: TruthfulQA (common misconceptions)

- Hallucination Benchmarks: HaluEval, FactCHD

- Information-Seeking Dialogue: FaithDial, CoQA

- RAG Datasets: neural-bridge/rag-dataset-12000

No Fact-Check Needed (Negative Class):

- Creative Writing: WritingPrompts, story generation

- Code: CodeSearchNet docstrings, programming tasks

- Opinion/Instruction: Dolly non-factual, Alpaca creative

This binary classification achieves 96.4% validation accuracy with ~12ms inference latency via native Rust/Candle integration.

Stage 2: Token-Level Detection + NLI Explanation

For prompts classified as fact-seeking, we run a two-model detection pipeline.

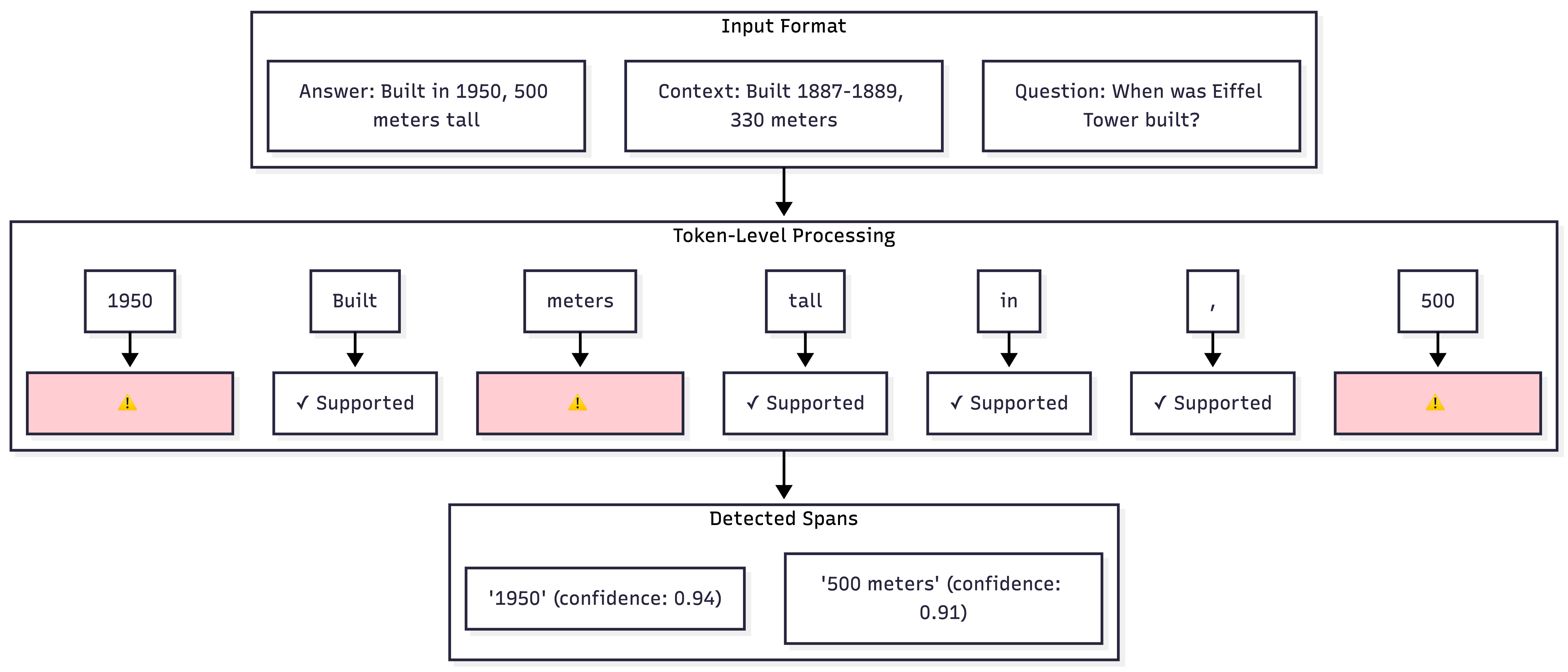

Token-Level Hallucination Detection

Unlike sentence-level classifiers that output a single “hallucinated/not hallucinated” label, token-level detection identifies exactly which tokens are unsupported by the context.

The model architecture:

Input: [CLS] context [SEP] question [SEP] answer [SEP]

↓

ModernBERT Encoder

↓

Token Classification Head (Binary per token)

↓

Label: 0 = Supported, 1 = Hallucinated (for answer tokens only)

Key design decisions:

- Answer-only classification: We only classify tokens in the answer segment, not context or question

- Span merging: Consecutive hallucinated tokens are merged into spans for readability

- Confidence thresholding: Configurable threshold (default 0.8) to balance precision/recall

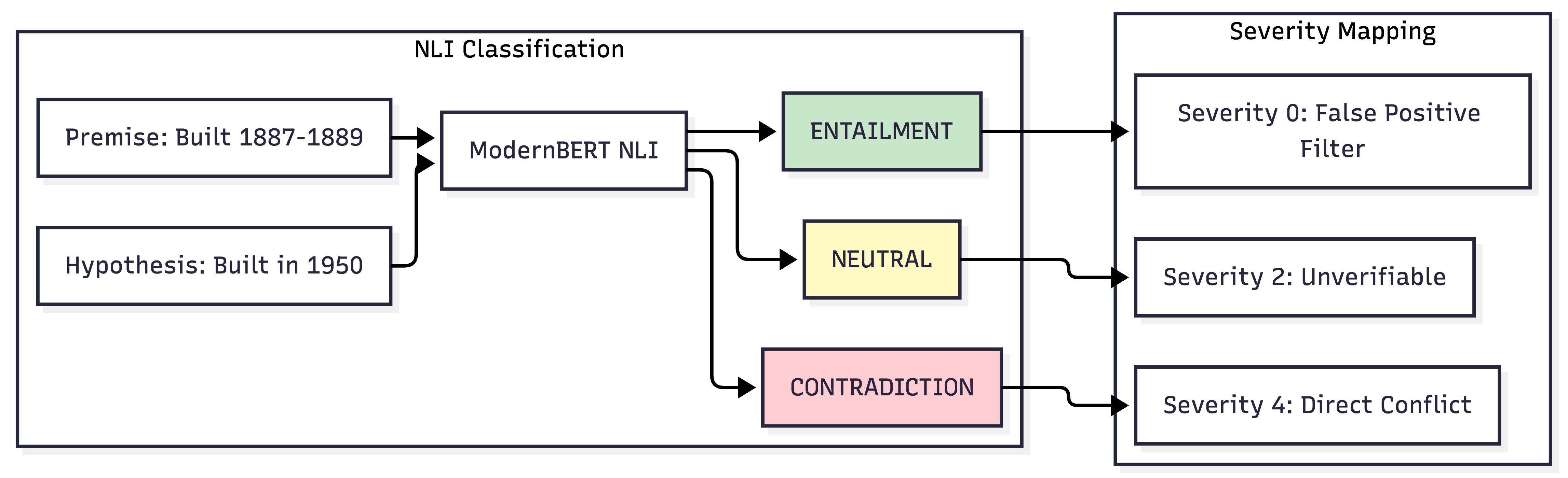

NLI Explanation Layer

Knowing that something is hallucinated isn’t enough—we need to know why. The NLI (Natural Language Inference) model classifies each detected span against the context:

| NLI Label | Meaning | Severity | Action |

|---|---|---|---|

| CONTRADICTION | Claim conflicts with context | 4 (High) | Flag as error |

| NEUTRAL | Claim not supported by context | 2 (Medium) | Flag as unverifiable |

| ENTAILMENT | Context supports the claim | 0 | Filter false positive |

Why the ensemble works: Token-level detection alone achieves only 59% F1 on the hallucinated class—nearly half of hallucinations are missed, and one-third of flags are false positives. We experimented with training a unified 5-class model (SUPPORTED/CONTRADICTION/FABRICATION/etc.) but it achieved only 21.7% F1—token-level classification simply cannot distinguish why something is wrong. The two-stage approach turns a mediocre detector into an actionable system: LettuceDetect provides recall (catching potential issues), while NLI provides precision (filtering false positives) and explainability (categorizing why each span is problematic).

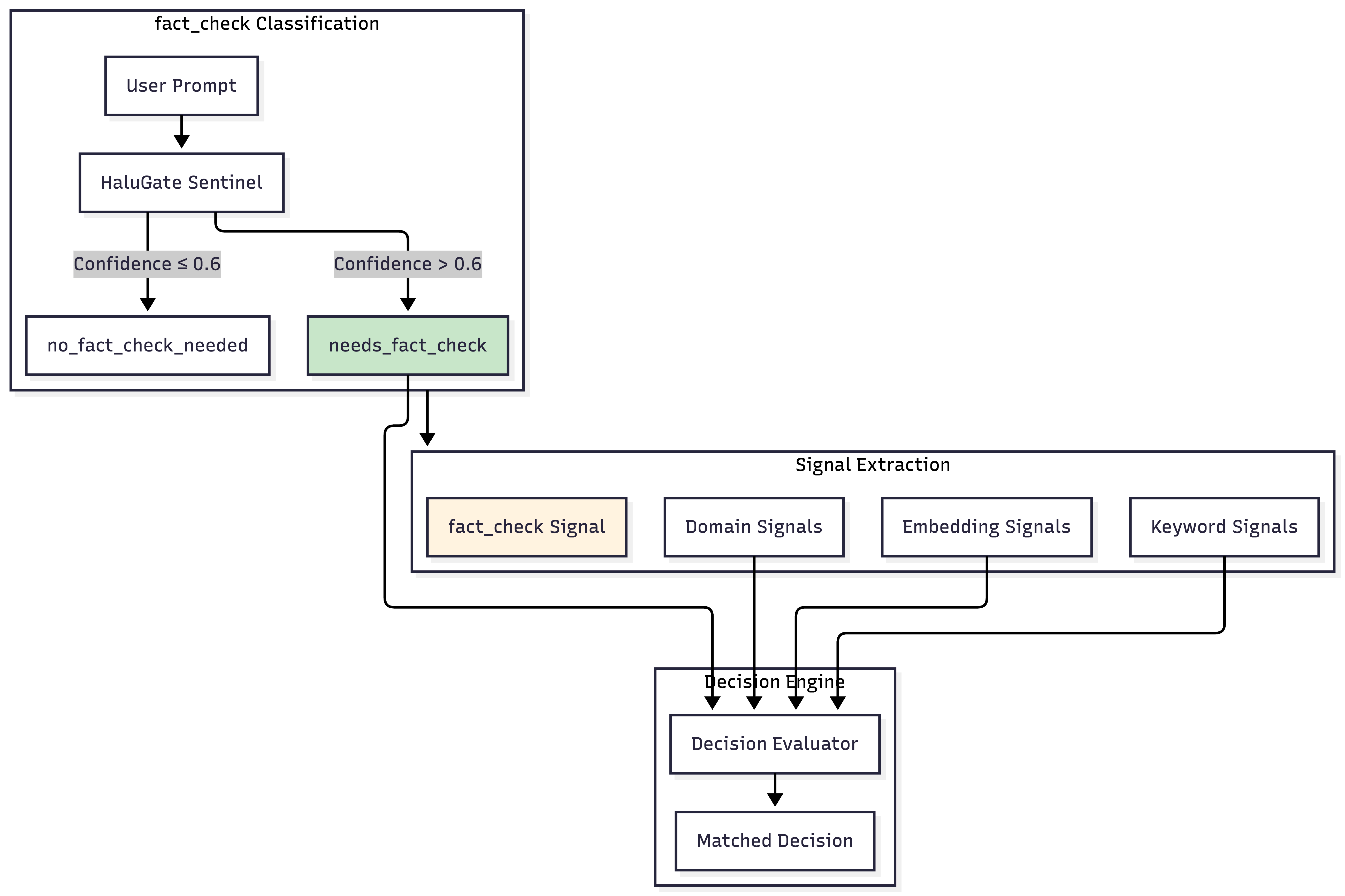

Integration with Signal-Decision Architecture

HaluGate doesn’t operate in isolation—it’s deeply integrated with our Signal-Decision Architecture as a new signal type and plugin.

fact_check as a Signal Type

Just as we have keyword, embedding, and domain signals, fact_check is now a first-class signal type:

This allows decisions to be conditioned on whether the query is fact-seeking:

Note: Even frontier models show hallucination variance between releases. For example, GPT-5.2’s system card demonstrates measurable hallucination delta compared to previous versions, highlighting the importance of continuous verification regardless of model sophistication.

decisions:

- name: "factual-query-with-verification"

priority: 100

rules:

operator: "AND"

conditions:

- type: "fact_check"

name: "needs_fact_check"

- type: "domain"

name: "general"

plugins:

- type: "hallucination"

configuration:

enabled: true

use_nli: true

hallucination_action: "header"

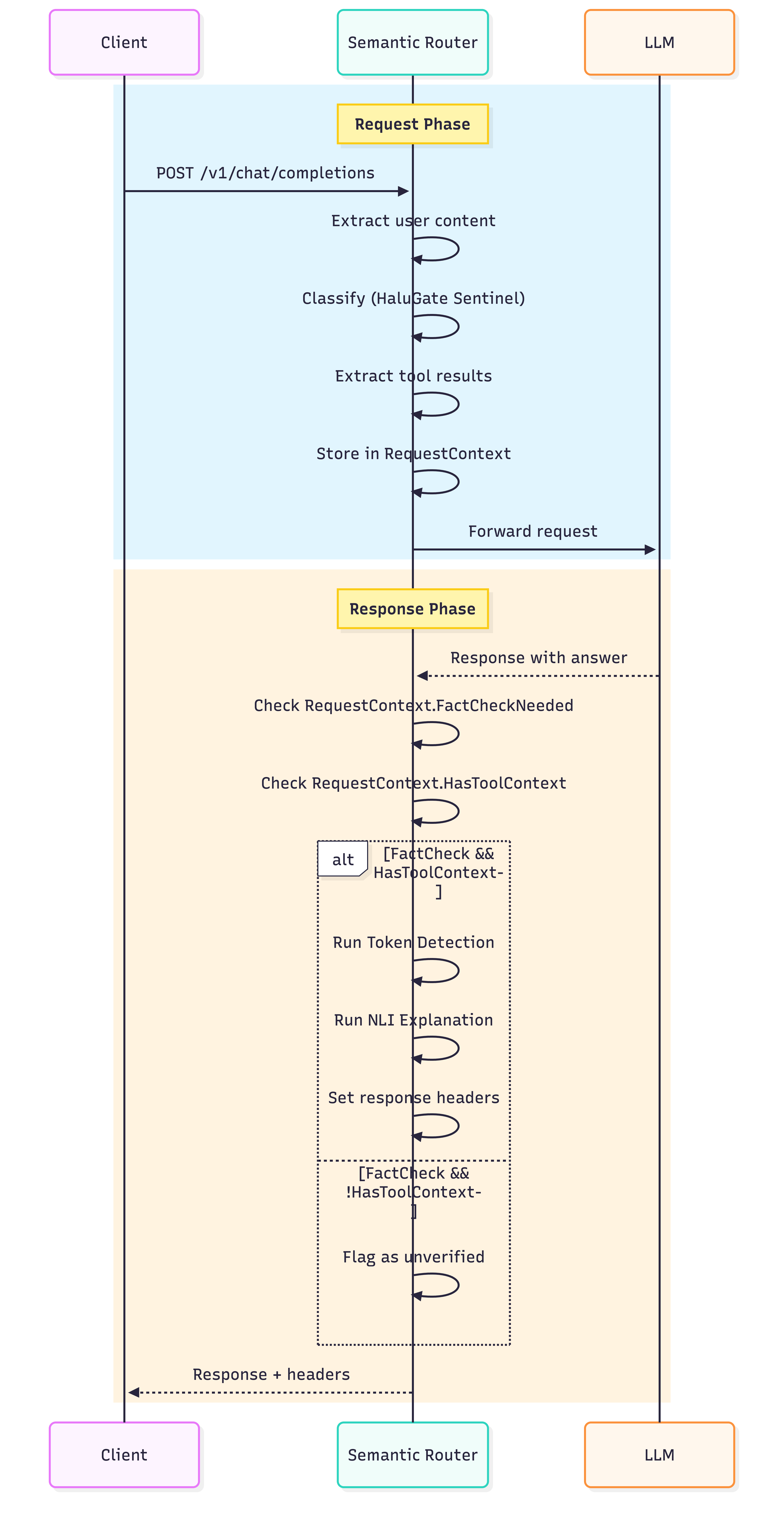

Request-Response Context Propagation

A key challenge: the classification happens at request time, but detection happens at response time. We need to propagate state across this boundary.

The RequestContext structure carries all necessary state:

RequestContext:

# Classification results (set at request time)

FactCheckNeeded: true

FactCheckConfidence: 0.87

# Tool context (extracted at request time)

HasToolsForFactCheck: true

ToolResultsContext: "Built 1887-1889, 330 meters..."

UserContent: "When was the Eiffel Tower built?"

# Detection results (set at response time)

HallucinationDetected: true

HallucinationSpans: ["1950", "500 meters"]

HallucinationConfidence: 0.92

The hallucination Plugin

The hallucination plugin is configured per-decision, allowing fine-grained control:

plugins:

- type: "hallucination"

configuration:

enabled: true

use_nli: true # Enable NLI explanations

# Action when hallucination detected

hallucination_action: "header" # "header" | "body" | "block" | "none"

# Action when fact-check needed but no tool context

unverified_factual_action: "header"

# Include detailed info in response

include_hallucination_details: true

| Action | Behavior |

|---|---|

header |

Add warning headers, pass response through |

body |

Inject warning into response body |

block |

Return error response, don’t forward LLM output |

none |

Log only, no user-visible action |

Response Headers: Actionable Transparency

Detection results are communicated via HTTP headers, enabling downstream systems to implement custom policies:

HTTP/1.1 200 OK

Content-Type: application/json

x-vsr-fact-check-needed: true

x-vsr-hallucination-detected: true

x-vsr-hallucination-spans: 1950; 500 meters

x-vsr-nli-contradictions: 2

x-vsr-max-severity: 4

For unverified factual responses (when tools aren’t available):

HTTP/1.1 200 OK

x-vsr-fact-check-needed: true

x-vsr-unverified-factual-response: true

x-vsr-verification-context-missing: true

These headers enable:

- UI Disclaimers: Show warnings to users when confidence is low

- Human Review Queues: Route flagged responses for manual review

- Audit Logging: Track unverified claims for compliance

- Conditional Blocking: Block high-severity contradictions

The Complete Pipeline: Three Paths

| Path | Condition | Latency Added | Action |

|---|---|---|---|

| Path 1 | Non-factual prompt | ~12ms (classifier only) | Pass through |

| Path 2 | Factual + No tools | ~12ms | Add warning headers |

| Path 3 | Factual + Tools available | 76-162ms | Full detection + headers |

Model Architecture Deep Dive

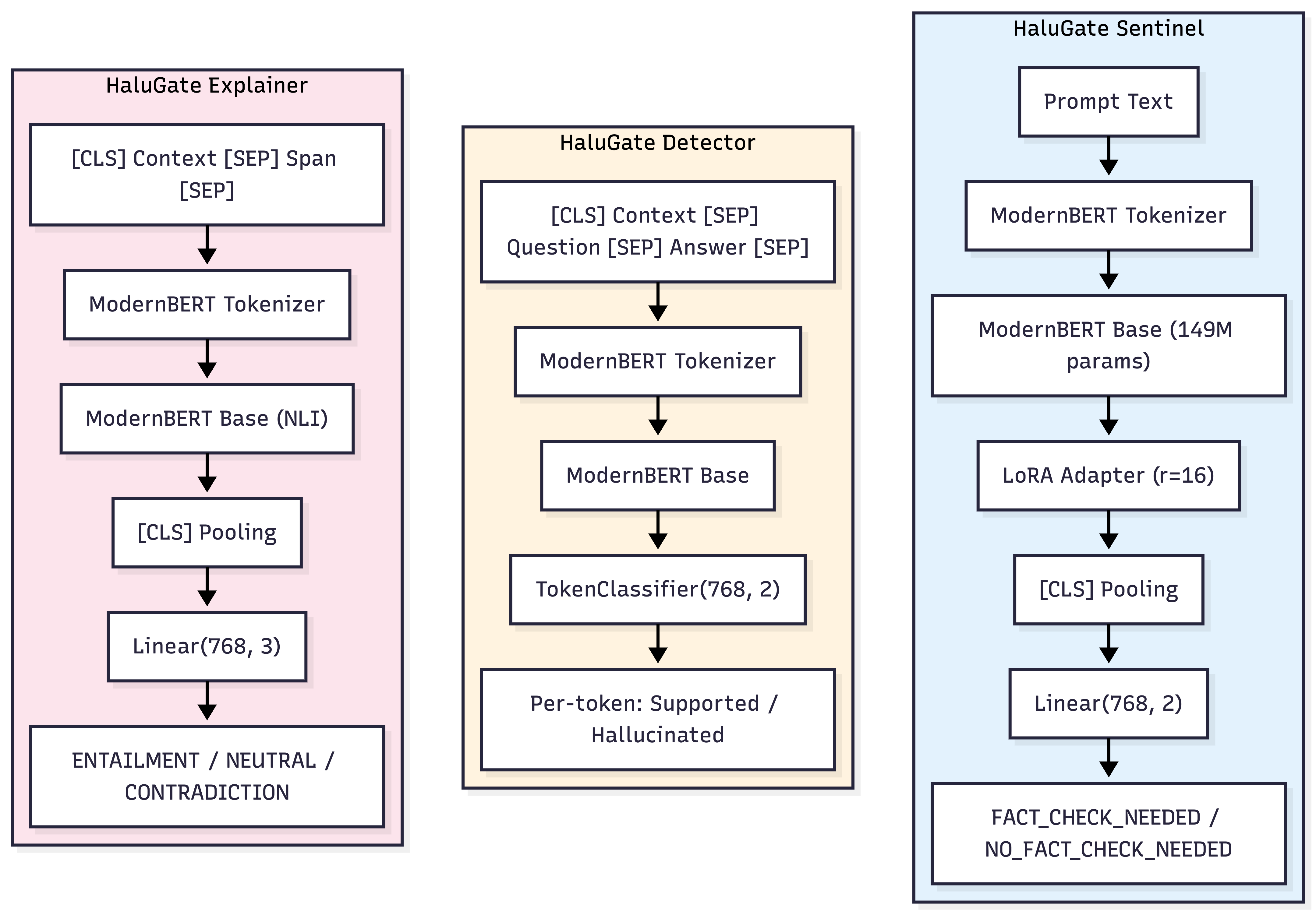

Let’s look at the three models that power HaluGate:

HaluGate Sentinel: Binary Prompt Classification

Architecture: ModernBERT-base + LoRA adapter + binary classification head

Training:

- Base Model:

answerdotai/ModernBERT-base - Fine-tuning: LoRA (rank=16, alpha=32, dropout=0.1)

- Training Data: 50,000 samples from 14 datasets

- Loss: CrossEntropy with class weights (handle imbalance)

- Optimization: AdamW, lr=2e-5, 3 epochs

Inference:

- Input: Raw prompt text

- Output: (class_id, confidence)

- Latency: ~12ms on CPU

The LoRA approach allows efficient fine-tuning while preserving the pretrained knowledge. Only 2.2% of parameters (3.4M out of 149M) are updated during training.

HaluGate Detector: Token-Level Binary Classification

Architecture: ModernBERT-base + token classification head

Input Format:

[CLS] The Eiffel Tower was built in 1887-1889 and is 330 meters tall.

[SEP] When was the Eiffel Tower built?

[SEP] The Eiffel Tower was built in 1950 and is 500 meters tall. [SEP]

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

Answer tokens (classification targets)

Output: Binary label (0=Supported, 1=Hallucinated) for each answer token

Post-processing:

- Filter predictions to answer segment only

- Apply confidence threshold (default: 0.8)

- Merge consecutive hallucinated tokens into spans

- Return spans with confidence scores

HaluGate Explainer: Three-Way NLI Classification

Architecture: ModernBERT-base fine-tuned on NLI

Input Format:

[CLS] The Eiffel Tower was built in 1887-1889. [SEP] built in 1950 [SEP]

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ ^^^^^^^^^^^^^^^

Premise (context) Hypothesis (span)

Output: Three-way classification with confidence:

- ENTAILMENT (0): Context supports the claim

- NEUTRAL (1): Cannot be determined from context

- CONTRADICTION (2): Context conflicts with claim

Severity Mapping:

| NLI Label | Severity Score | Interpretation |

|---|---|---|

| ENTAILMENT | 0 | Likely false positive—filter out |

| NEUTRAL | 2 | Claim is unverifiable |

| CONTRADICTION | 4 | Direct factual error |

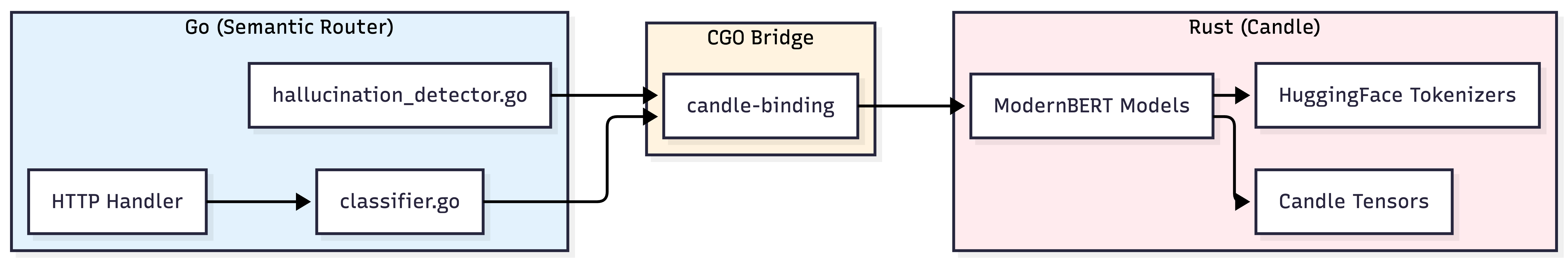

Why Native Rust/Candle Matters

All three models run natively via Candle (Hugging Face’s Rust ML framework) with CGO bindings to Go:

Benefits of this approach:

| Aspect | Python (PyTorch) | Native (Candle) |

|---|---|---|

| Cold start | 5-10s | <500ms |

| Memory | 2-4GB per model | 500MB-1GB per model |

| Latency | +50-100ms overhead | Near-zero overhead |

| Deployment | Python runtime required | Single binary |

| Scaling | GIL contention | True parallelism |

This eliminates the need for a separate Python service, sidecars, or model servers—everything runs in-process.

Latency Breakdown

Here’s the measured latency for each component in the production pipeline:

| Component | P50 | P99 | Notes |

|---|---|---|---|

| Fact-check classifier | 12ms | 28ms | ModernBERT inference |

| Tool context extraction | 1ms | 3ms | JSON parsing |

| Hallucination detector | 45ms | 89ms | Token classification |

| NLI explainer | 18ms | 42ms | Per-span classification |

| Total overhead | 76ms | 162ms | When detection runs |

The total overhead (76-162ms) is negligible compared to typical LLM generation times (5-30 seconds), making HaluGate practical for synchronous request processing.

Configuration Reference

Complete configuration for hallucination mitigation:

# Model configuration

hallucination_mitigation:

# Stage 1: Prompt classification

fact_check_model:

model_id: "models/halugate-sentinel"

threshold: 0.6 # Confidence threshold for FACT_CHECK_NEEDED

use_cpu: true

# Stage 2a: Token-level detection

hallucination_model:

model_id: "models/halugate-detector"

threshold: 0.8 # Token confidence threshold

use_cpu: true

# Stage 2b: NLI explanation

nli_model:

model_id: "models/halugate-explainer"

threshold: 0.9 # NLI confidence threshold

use_cpu: true

# Signal rules for fact-check classification

fact_check_rules:

- name: needs_fact_check

description: "Query contains factual claims that should be verified"

- name: no_fact_check_needed

description: "Query is creative, code-related, or opinion-based"

# Decision with hallucination plugin

decisions:

- name: "verified-factual"

priority: 100

rules:

operator: "AND"

conditions:

- type: "fact_check"

name: "needs_fact_check"

plugins:

- type: "hallucination"

configuration:

enabled: true

use_nli: true

hallucination_action: "header"

unverified_factual_action: "header"

include_hallucination_details: true

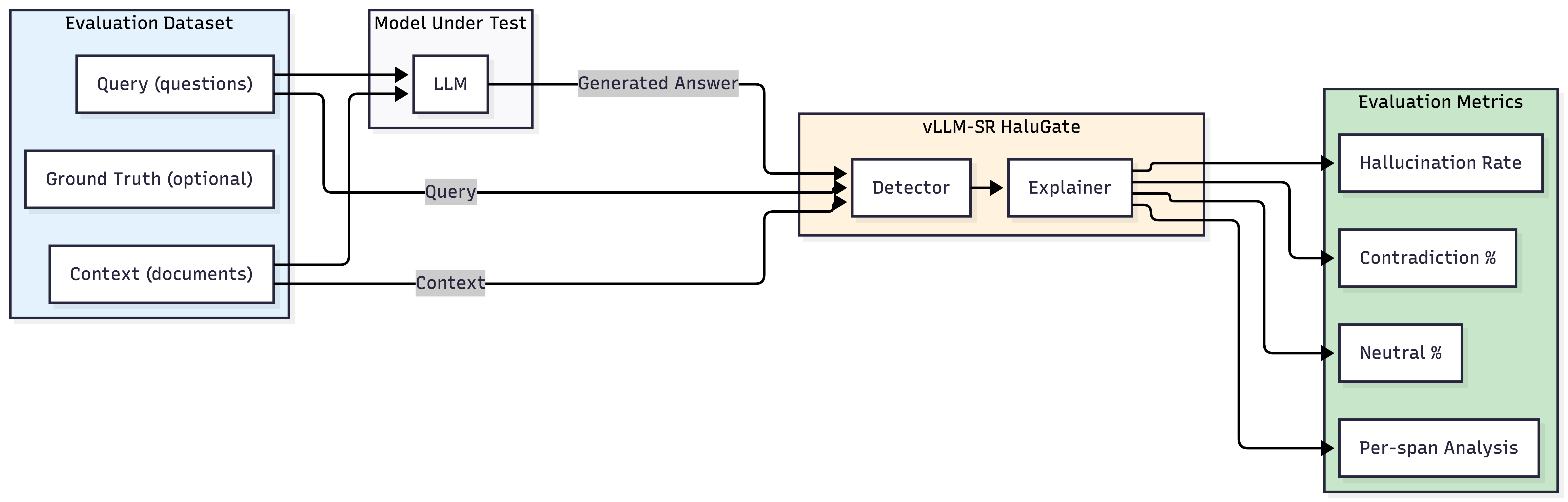

Beyond Production: HaluGate as an Evaluation Framework

While HaluGate is designed for real-time production use, the same pipeline can power offline model evaluation. Instead of intercepting live requests, we feed benchmark datasets through the detection pipeline to systematically measure hallucination rates across models.

Evaluation Workflow

The evaluation framework treats HaluGate as a hallucination scorer:

- Load Dataset: Use existing QA/RAG benchmarks (TriviaQA, Natural Questions, HotpotQA) or custom enterprise datasets with context-question pairs

- Generate Responses: Run the model under test against each query with provided context

- Detect Hallucinations: Pass (context, query, response) triples through HaluGate Detector

- Classify Severity: Use HaluGate Explainer to categorize each flagged span

- Aggregate Metrics: Compute hallucination rates, contradiction ratios, and per-category breakdowns

Limitations and Scope

HaluGate specifically targets extrinsic hallucinations—where tool/RAG context provides grounding for verification. It has known limitations:

What HaluGate Cannot Detect

| Limitation | Example | Reason |

|---|---|---|

| Intrinsic hallucinations | Model says “Einstein was born in 1900” without any tool call | No context to verify against |

| No-context scenarios | User asks factual question, no tools defined | Missing ground truth |

Transparent Degradation

For requests classified as fact-seeking but lacking tool context, we explicitly flag responses as “unverified factual” rather than silently passing them through:

x-vsr-fact-check-needed: true

x-vsr-unverified-factual-response: true

x-vsr-verification-context-missing: true

This transparency allows downstream systems to handle uncertainty appropriately.

Acknowledgments

HaluGate builds on excellent work from the research community:

- Token-level detection architecture: Inspired by LettuceDetect from KRLabs—pioneering work in ModernBERT-based hallucination detection

- NLI models: Built on tasksource/ModernBERT-base-nli—high-quality NLI fine-tuning

- Training datasets: TruthfulQA, HaluEval, FaithDial, RAGTruth, and other publicly available benchmarks

We’re grateful to these teams for advancing the field of hallucination detection.

Conclusion

HaluGate brings principled hallucination detection to production LLM deployments:

- Conditional verification: Skip non-factual queries, verify factual ones

- Token-level precision: Know exactly which claims are unsupported

- Explainable results: NLI classification tells you why something is wrong

- Zero-latency integration: Native Rust inference, no Python sidecars

- Actionable transparency: Headers enable downstream policy enforcement

The next time your LLM calls a tool, receives accurate data, and still gets the answer wrong—HaluGate will catch it before your users do.

Resources:

- Signal-Decision Architecture Blog

- vLLM Semantic Router GitHub Repo

- vLLM Semantic Router Documentation

Join the discussion: Share your use cases and feedback in #semantic-router channel on vLLM Slack