Announcing vllm.ai Website and Some Community Updates

For a long time, vllm.ai simply redirected to the vLLM GitHub page. Thanks to our community, we now have a brand-new vllm.ai website, drawing inspiration from the PyTorch website.

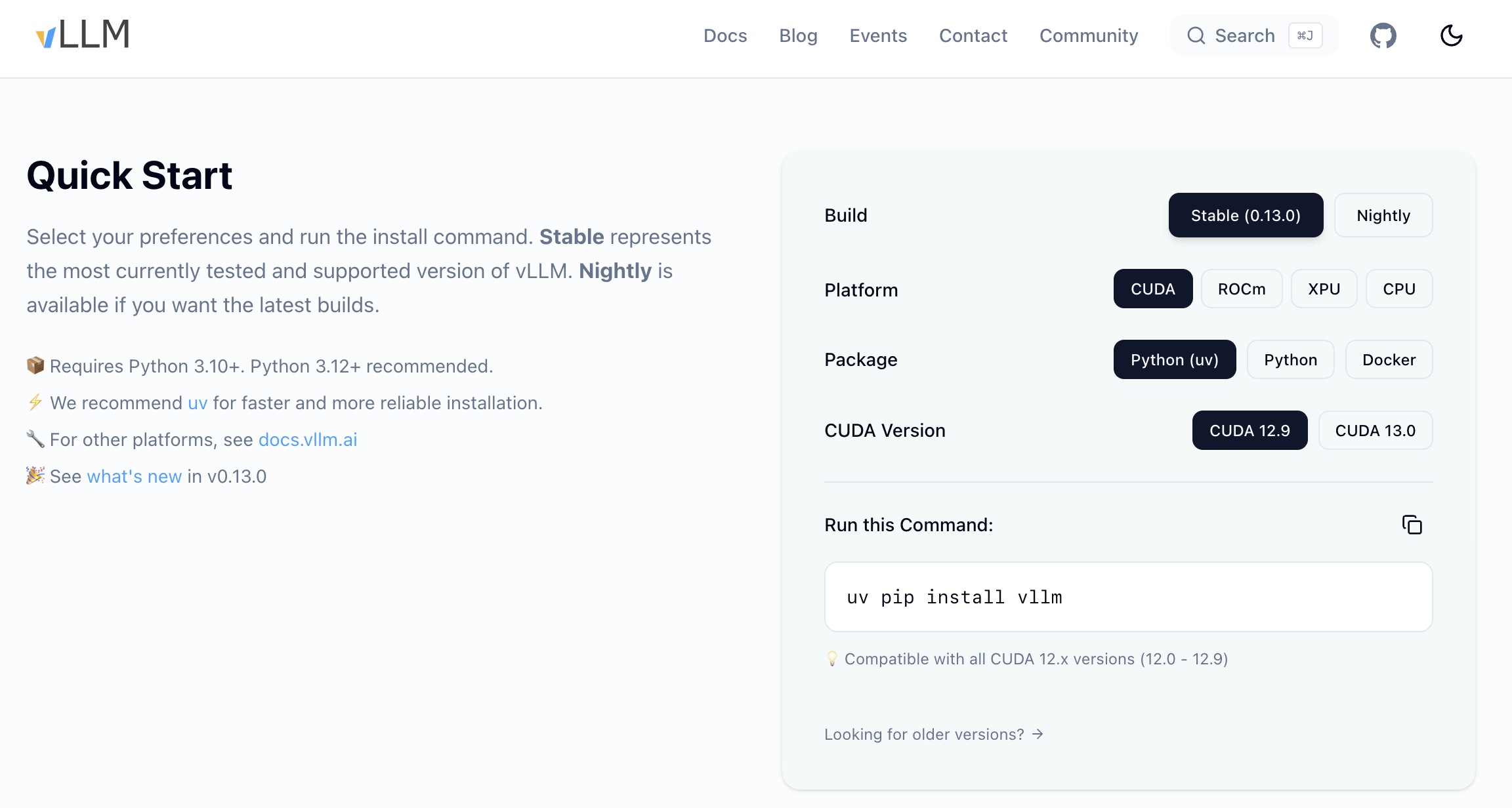

The new website features an installation selector to guide users in installing vLLM across various environments.

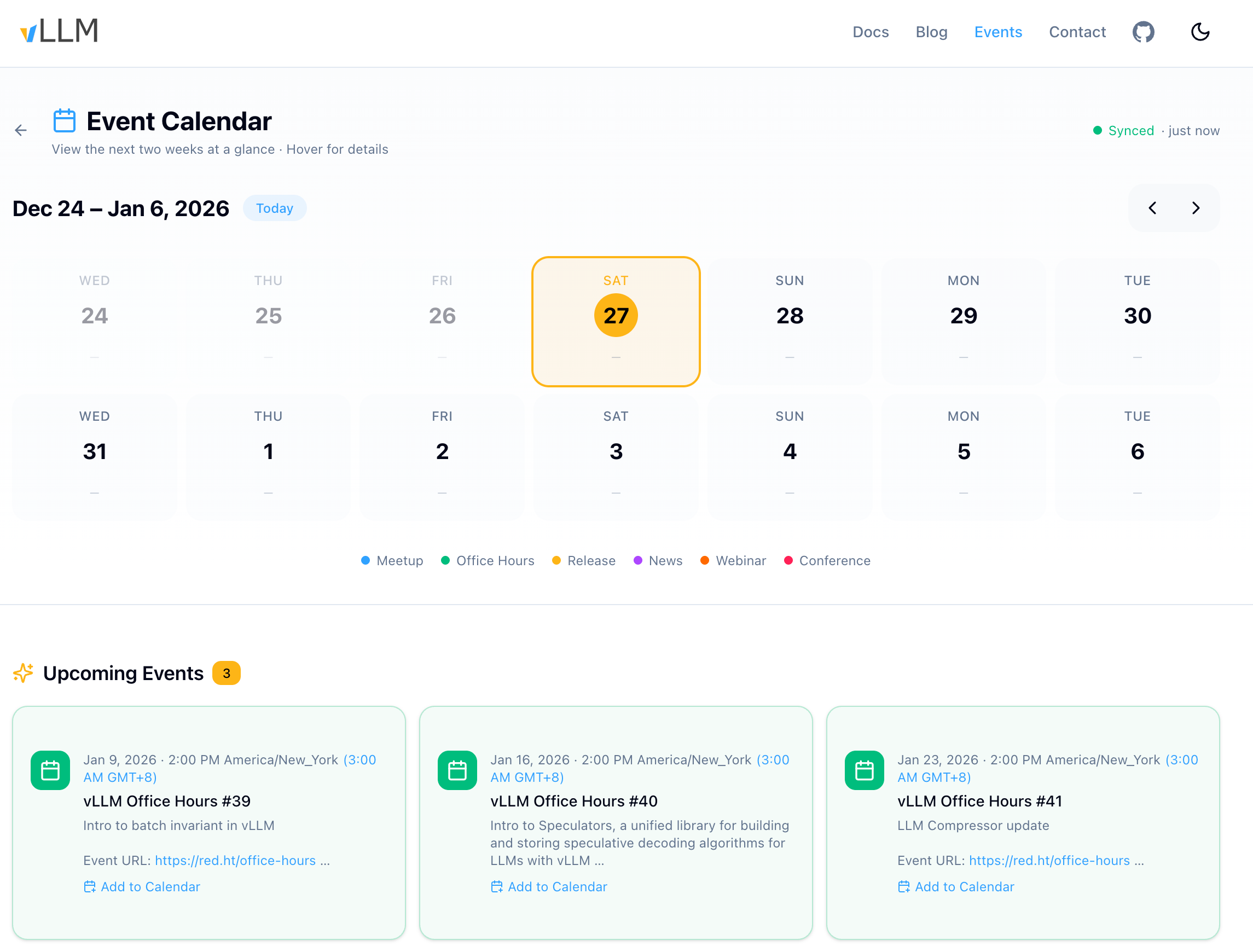

The website also includes an “Events” page to track all community events and logistics updates.

Why a New Website?

The motivation is simple: we want to separate the maintenance of community events and logistics updates from the GitHub project. Previously, almost all information about vLLM was hosted on GitHub, with event announcements and meetup slides added through pull requests. This process placed an unnecessary burden on developers who wanted to focus on code development.

Going forward, we will move most community events and logistics updates from the GitHub project to the vLLM website, allowing the GitHub project to focus more on code development.

One potential drawback is that people can no longer submit pull requests to request changes as they currently do. To address this, we’ve created a new contact email address, website-feedback@vllm.ai. If you have any suggestions to improve the website, please send an email to this address, and we will review it and update the website accordingly.

New Community Communication Email Addresses

In addition to the new website, we’ve added several new email addresses for community communication:

- talentpool@vllm.ai - Submit your resume for internships and full-time positions. We will forward resumes to our partner companies to give you more exposure. LLM inference is in high demand, and our partner companies are eager to hire talented engineers.

- collaboration@vllm.ai - For partner companies interested in accessing resumes, organizing meetups, or forming technical partnerships. We are open to collaborating with any company interested in using vLLM in their products or services. This address will gradually replace the existing functionality of vllm-questions@lists.berkeley.edu.

- social-promotion@vllm.ai - For social media promotion collaborations (Twitter/X, LinkedIn, RedNote, WeChat, etc.). If you have anything interesting to share about vLLM, please send an email to this address, and we will review and promote it.

New Community Tools

To help the community keep track of vLLM’s progress, we’ve created a new repository called vLLM Daily. It summarizes the changes in vLLM every day. You can subscribe to the updates by adding https://github.com/vllm-project/vllm-daily/commits/main.atom to your favorite RSS reader.
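
If you would rather read the feed from a script than from an RSS reader, the following is a minimal sketch using only the Python standard library. It assumes the feed follows the usual Atom layout, with each `entry` carrying `title`, `updated`, and `link` elements; the helper names `fetch_feed` and `parse_atom_entries` are hypothetical and not part of vLLM or vLLM Daily.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Feed URL from the announcement above.
FEED_URL = "https://github.com/vllm-project/vllm-daily/commits/main.atom"

# Standard Atom namespace; assumed to be what the feed uses.
ATOM_NS = {"atom": "http://www.w3.org/2005/Atom"}


def parse_atom_entries(xml_data):
    """Return a list of (updated, title, link) tuples from an Atom document.

    Accepts str or bytes. Missing fields become empty strings.
    """
    root = ET.fromstring(xml_data)
    entries = []
    for entry in root.findall("atom:entry", ATOM_NS):
        title = entry.findtext("atom:title", default="", namespaces=ATOM_NS) or ""
        updated = entry.findtext("atom:updated", default="", namespaces=ATOM_NS) or ""
        link_el = entry.find("atom:link", ATOM_NS)
        link = link_el.get("href", "") if link_el is not None else ""
        entries.append((updated.strip(), title.strip(), link))
    return entries


def fetch_feed(url=FEED_URL, timeout=10):
    """Download the raw feed bytes (hypothetical helper; requires network access)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()
```

Calling `parse_atom_entries(fetch_feed())` would then yield one tuple per commit in the feed, which you could print, filter by date, or forward to a chat channel.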

Conclusion

From a research project to a widely used production inference engine, vLLM would not be where it is today without the incredible support from our community. We’re excited to continue building the future of LLM inference together!